Research-Based Teaching

Integrating research infrastructures into teaching

Our needs analysis revealed that linguistics and language-related degree programmes seldom include language data standards and research data repositories in their learning outcomes. A survey of lecturers from linguistics and language-related disciplines also exposed a number of challenges in using repositories for language data discovery, reuse and archiving. Against this backdrop, the present guide shows how teachers and trainers can leverage the CLARIN research infrastructure to help students enhance their data collection, processing and analysis, and archiving skills. By integrating research infrastructures into teaching, educators can bridge the gap between theoretical knowledge and practical aspects of linguistic research data management, equipping students with the necessary skills and competences to thrive in the evolving landscape of open science and data-driven research.

The motivation for writing this guide arises from several factors identified within UPSKILLS’s remit. The lecturers who participated in our needs analysis expressed interest in integrating research infrastructure data and services into their teaching. However, they faced some challenges, such as difficulty in identifying optimal resources and tools to explore specific linguistic aspects, lack of resources for certain languages, insufficient documentation and tutorials on how to effectively incorporate resources in the classroom, and the absence of discipline-specific best practices and guidelines. An additional questionnaire conducted as part of Intellectual Output 2 revealed some technical, financial, and administrative challenges that both lecturers and students encountered when using repositories for language data discovery, reuse and archiving. Furthermore, students’ low level of digital literacy was cited as a barrier to using infrastructure and tools in data collection and archiving.

At the same time, informal talks with the UPSKILLS consortium partners and lecturers outside the consortium revealed a lack of general awareness about the wealth of language resources and knowledge available through the CLARIN research infrastructure and national consortia in their respective countries. This lack of general awareness about the existence and added value of research infrastructures has also been acknowledged at the European level by initiatives such as EOSC and FAIRsFAIR. A university landscape analysis conducted by the EOSC Skills and Training Working Group in 2019 identified a low awareness among students and researchers of research data management (RDM) practices, a lack of skills and insufficient training opportunities at the bachelor, master and doctoral levels (Stoy et al., 2020). Although things have improved gradually at the PhD level, there is evidence of slow integration of FAIR data principles, open science and data-related topics at the Bachelor and Master levels. Therefore, the FAIR Competence Framework for Higher Education proposes a set of core competences for FAIR data education that universities can use to design and integrate RDM and FAIR-data-related skills in their curricula and programmes (Demchenko et al., 2021). Students, scholars, teachers and researchers from all disciplines are encouraged to acquire fundamental skills for open science, including the ability to effectively interact with federated research infrastructures and open science tools for collaborative research. To further support the integration of these skills into the university curricula, FAIRsFAIR published an adoption handbook “How to be FAIR with your data – A teaching and training handbook for higher education institutions’’ (Engelhardt et al., 2022), which contains ready-made lessons plans on a variety of topics, including the use of repositories, data creation and reuse. We hope this CLARIN guide and learning content is a useful addition to these European initiatives but from a discipline-specific angle.

As Gledic et al. (2021) reveal, employers in the digital business sector increasingly seek to hire graduates from language-related programmes with data-oriented and research-oriented skills. Such skills have become even more important as new job profiles continue open up to language and linguistic graduates in the age of AI and ChatGPT revolution, such as computational linguists, machine translation specialists, data curators, data stewards, data annotators, knowledge engineers and terminologists.

In light of this current context, this guide shows how teachers and trainers can leverage the CLARIN infrastructure to help students enhance their data collection, processing and analysis, and archiving skills. By integrating research infrastructures into teaching, educators can bridge the gap between theoretical knowledge and practical aspects of linguistic research data management, equipping students with the necessary skills and competences to thrive in the evolving landscape of open science and data-driven research.

| 👉 PRO-TIP: See the interactive website that the consortium partners from the University of Bologna developed to help students identify the skills they need for the job profiles they targeted. |

References:

- Demchenko, Yuri, Lennart Stoy, Claudia Engelhardt, and Vinciane Gaillard. ‘D7.3 FAIR Competence Framework for Higher Education (Data Stewardship Professional Competence Framework)’, 24 February 2021. DOI: 10.5281/zenodo.5361917.

- Engelhardt, C., K. Biernacka, A. Coffey, R. Cornet, A. Danciu … B. Zhou (2022). How to be FAIR with your data. A teaching and training handbook for higher education institutions (V1.2.1) [Computer software]. Zenodo. DOI: 10.5281/zenodo.6674301

- Gledić, J., M. Đukanović, M. Miličević Petrović, I. van der Lek & S. Assimakopoulos. (2021). Survey of curricula: Linguistics and language-related degrees in Europe. Zenodo. DOI: 10.5281/zenodo.5030861

- Stoy, L., B. Saenen, J. Davidson, C. Engelhardt & V. Gaillard. (2020). FAIR in European Higher Education (1.0). Zenodo. DOI: 10.5281/zenodo.5361815

This guide complements the Research-Based Teaching: Guidelines and Best Practices by providing a practical introduction to the CLARIN research infrastructure for lecturers, teachers, trainers and curriculum designers interested in integrating learning resources (e.g. corpora), language technology tools and research data repositories in their curricula, summer schools, and workshops. Along with this guide, an accompanying learning block titled Introduction to Language Data: Standards and Repositories is available on Moodle as part of Task 3.2. UPSKILLS Learning Content. This learning block consists of a modular structure that provides lecturers with a comprehensive collection of learning resources and activities they can use to educate themselves and as reference materials for the classroom. Each major topic in this guide links to relevant learning resources on Moodle to help the teachers identify what they need.

Teachers and trainers can use the guide in the following ways:

- To identify CLARIN centres of expertise, research data repositories, language resources (mainly corpora), services and natural language processing (NLP) tools that they can use to enhance their research and teaching.

- To identify learning content and activities in the accompanying course on Moodle, which they can use in two ways: (1) further educate themselves on a specific topic and (2) repurpose for classroom use to help students improve their data discovery, handling, sharing and archiving skills.

|

👉 PRO-TIPS:

|

Teachers and trainers who already use the CLARIN infrastructure for language research or research data management in their courses are encouraged to share and contribute to future versions of this guide with their teaching and learning activities examples.

While aimed primarily at teachers and trainers in linguistics and language-related fields, this guide can also benefit anyone in humanities and social sciences disciplines, including curriculum designers, policymakers, librarians, data stewards, and industry professionals seeking to use the infrastructure for research and training.

This work in UPSKILLS aligns with international initiatives like the European Open Science Cloud (EOSC) and FAIRisFAIR, which promote the adoption of open science and research data management based on the FAIR guiding principles (Wilkinson et al., 2016) for scientific data management across all domains, disciplines and levels.

Please note this guide does not aim to teach how to design, plan and evaluate a course. To this effect, teachers, instructors and curriculum designers may benefit from other guidelines developed in the project.

|

👉 PRO-TIPS:

|

*A PDF version of this guide is available for download in the Zenodo repository.

** Throughout this guide, you will encounter many technical terms. A glossary of terms can be found in our Moodle learning block, and it can also be downloaded from UPSKILLS Glossary – Introduction to Language Data – Standards and Repositories.

References:

- Gledić, J., A. Assimakopoulos, I. Buchberger, J. Budimirović, M. Đukanović, T. Kraš, M. Podboj, N. Soldatić & M. Vella, Michela. (2021). UPSKILLS guidelines for Learning Content Creation. Zenodo. DOI: 10.5281/zenodo.8302296

- Simonović, M, I. van der Lek, D. Fišer & B. Arsenijević, B. (2021). Guidelines for the students’ projects and research reporting formats. Zenodo. DOI: 10.5281/zenodo.8297430

- Simonović, M., B. Arsenijević, I. van der Lek, S. Assimakopoulos, L. ten Bosch, D. Fišer, T. Kraš, P. Marty, M. Miličević Petrović, S. Milosavljević, M. Tanti, L. van der Plas, M. Pallottino, G. Puskas & T. Samardžić. (2023). Research-based teaching: Guidelines and best practices. Zenodo. DOI: 10.5281/zenodo.8176220

- Wilkinson, M. D., M. Dumontier, I.J. Aalbersberg, G. Appleton, M. Axton, A. Baak, … B. Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3, 160018–160018. DOI: 10.1038/sdata.2016.18

The European Commission defines research infrastructures as:

Facilities, resources and services used by the science community to conduct research and foster innovation. They include major scientific equipment, resources such as collections, archives or scientific data, e-infrastructures such as data and computing systems, and communication networks. They can be used beyond research, e.g. for education or public services and they may be single-sited, distributed, or virtual (European Commission, 2016).

Research infrastructures (RIs) are based on national consortia of research institutes, universities, libraries, museums and archives and support researchers in managing the “data lifecycle” in their research projects by providing guidelines for the creation of data management plans and formats to facilitate long-term preservation, access and reuse of research data in the context of Open Science, Open Access and FAIR data principles. The availability of open research data offers valuable resources (e.g. digital text collections, corpora) for students and educators to explore and analyse real-world datasets and it helps them engage in collaborative research across various disciplines. The Helsinki Digital Humanities Hackathon represents a great example of interdisciplinary collaboration, data-driven research, and teaching. Many educational institutions and organisations across Europe have recognised the importance of open science and have incorporated training programs and courses to educate students and researchers about open science principles.

| 👉 PRO-TIP: To help students understand the benefits of open science, encourage them to play the Open Up Your Research Game developed by the University of Zurich. The game scenario centres around a PhD candidate who needs to choose between adopting an open science or a traditional approach to conducting research. |

Since 2002, about 50 European research infrastructures (RIs) have been set up under the auspices of the European Strategy Forum on Research Infrastructures (ESFRI) in a wide variety of disciplines: e-Infrastructures, Energy, Environment, Health and Food, Physical Sciences & Engineering, and Social and Cultural Innovation. Examples of research infrastructures in the Social and Cultural Innovation sector are CESSDA, CLARIN ERIC, DARIAH-EU, E-RHIS, ESS, and SHARE (for more details, see the ESFRI Roadmap). These European RIs aim to provide open, fair and transparent access to their facilities and services for researchers, scholars and students across Europe and beyond. Moreover, in 2018, the European Commission launched the European Open Science Cloud (EOSC) initiative, which aims to aggregate the services provided by these research infrastructures into one open virtual environment that shares scientific data across borders and disciplines.

| 👉 PRO-TIP: To learn how the CLARIN services are integrated into the EOSC platform, watch this video: A Study on the Use of Nouns by Female and Male Members of Parliament. Teachers can replicate this small study with their MA or PhD students using this tutorial from the CLARIN website. |

The two most relevant research infrastructures in digital humanities are the CLARIN ERIC and DARIAH-EU infrastructures. While DARIAH-EU has a broader focus on the arts and humanities disciplines, facilitating knowledge exchange and collaboration through working groups, CLARIN ERIC focuses on the collection, management and long-term archiving of language resources and technologies in social sciences and humanities. These two infrastructures collaborate closely on various topics related to training and education through the Digital Humanities Course Registry working group, and by supporting and co-organising summer schools and workshops in digital humanities. This guide will mainly focus on CLARIN and how teachers can use it for language and linguistic research.

Besides CLARIN, there are also other infrastructures used to discuss, host and disseminate language resources and technologies, such as ELRC-SHARE, European Language Resources Association (ELRA), European Language Grid (ELG), and META-SHARE. For more details and the state-of-the-art on language resources and technology developments, refer to Agerri et al. (2023).

|

📖 Teaching & Learning Resources on Moodle To give students a general introduction to research infrastructures for language resources and technologies, you can use and combine the following learning activities on Moodle, Introduction to Language Data: Standards and Repositories:

Note: The numbers of the Moodle activities are not chronological because the content has been combined from several units to create learning paths. |

References:

- Agerri, R., E. Agirre, I. Aldabe, N. Aranberri, J.M. Arriola, A. Atutxa, G. Azkune, J.A. Campos, A. Casillas, … A. Soroa. (2023). State-of-the-Art in language technology and language-centric Artificial Intelligence. In Rehm, G., Way, A. (Eds) European Language Equality: Cognitive Technologies. Cham: Springer.

- European Commission, Directorate-General for Research and Innovation. (2016) European charter of access for research infrastructures: principles and guidelines for access and related services. Publications Office. DOI: https://data.europa.eu/doi/10.2777/524573

To ensure that language resources and datasets can be found, are accessible, interoperable and reusable, researchers are recommended to deposit language resources, tools and associated metadata in a FAIR and trustworthy research data repository. FAIR is a set of guiding principles for scientific data management developed by Wilkinson et al. (2016) to help improve the findability, accessibility, interoperability and reuse of digital assets. These principles have started to be adopted for data, software, and even training materials. FAIR repositories help researchers make their language resources findable and accessible for a long time by assigning persistent identifiers (PID), such as DOI or handle, which will help retrieve a resource even when it has been moved to another server or domain. Furthermore, repositories provide a fixed set of metadata elements (or schema) to describe the deposited language resources consistently, e.g. using Dublin Core, OLAC, or CMDI. A free metadata search engine (e.g. Virtual Language Observatory or OLAC) may be included in the infrastructure to allow users to search and locate language resources useful for a specific (research project). In addition, repositories use standardised authentication and authorisation procedures (e.g. single-sign-on) and support different licence models to enable controlled access permissions for different user groups (i.e. use a resource for academic or commercial purposes). Finally, repositories promote interoperability through open and standardised formats and facilitate reusability of the language resources and datasets by providing guidelines and best practices for research data management. To ensure that a research data repository is trustworthy, it must undergo a quality assessment procedure and get a seal of quality, e.g. CoreTrustSeal, CLARIN B-Centre Certificate, ISO Standard 16363:2012. Data repositories can also serve as backups during rare but devastating events where data is lost to the researcher and must be retrieved.

| 👉 PRO-TIP: Go to the CLARIN Centre Registry and see if you can find a CoreTrustSeal-certified centre in your country. |

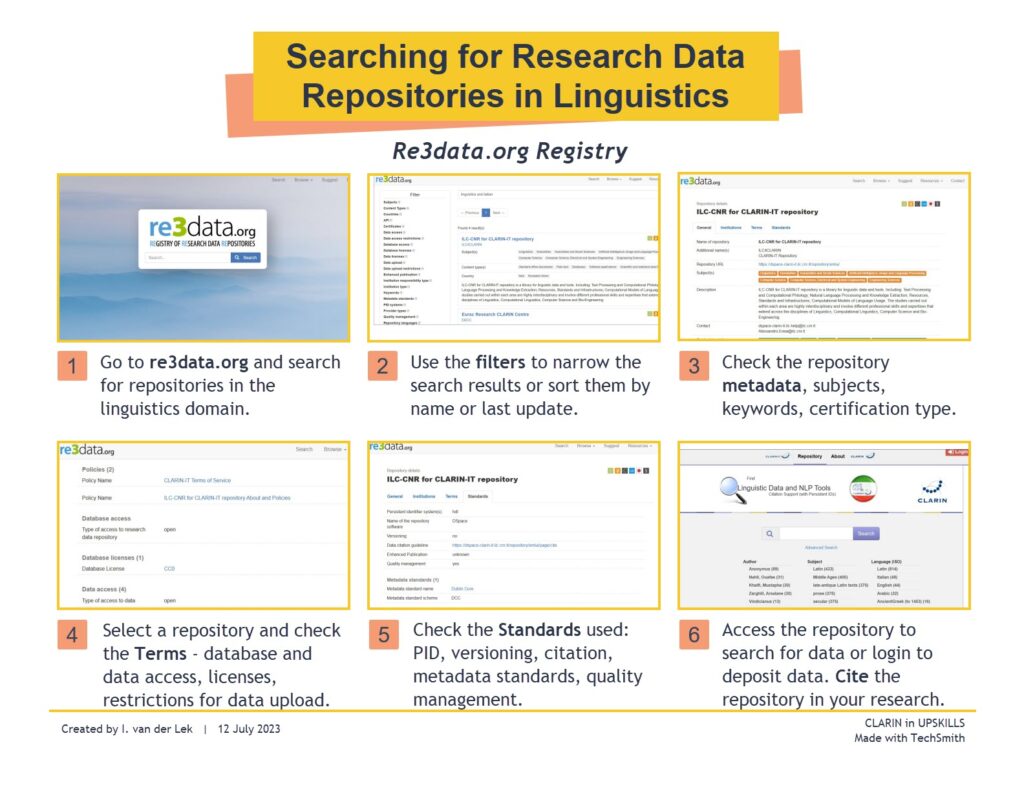

For teachers and students who have never used research data repositories in research or teaching, the re3data repository registry is a good platform to start exploring available linguistic data repositories. This cross-disciplinary directory contains the metadata of over 2000 repositories in different disciplines. Each repository entry is described with a consistent set of metadata and it can be cited. Here is a quick guide on how to use the registry:

Searching for linguistic data repositories in the re3org. repository registry

When searching for a repository to use for either linguistic research or for sharing and archiving research outputs, teachers could use the re3data registry to teach students how to evaluate and compare different linguistic research data repositories using the checklist that follows:

-

- What types of policies, documentation and guidelines does the repository offer?

- Is the repository certified (e.g. CoreTrust Seal or CLARIN certification)?

- Can users search for and access the research data in open access, or are they required to register?

- Are registration and membership required to be able to upload one’s research data to the repository?

- What type of licences does the repository support? (e.g. Creative Commons Licences)

- Does it support common metadata standards and formats (e.g. Dublin Core)?

- Does it support citation standards and attribution (e.g. implementation of persistent identifiers, such as handles and/or DOIs)?

- Does the repository offer documentation, guidelines and tutorials for finding and using research data?

- Does the repository support the integration of language resources, tools and services in educational settings?

- Does the repository comply with ethical guidelines for data sharing, especially when dealing with sensitive or personal information?

Through the questionnaire in Annex B (please see the PDF version for details) and testimonials of the lecturers participating in the UPSKILLS projects and events, we have collected a few examples of repositories used for teaching purposes in different language-related disciplines. Student teaching assistants who start teaching may find them useful as these repositories provide valuable resources for both teaching and research purposes.

The IRIS database, the ReLDI repository for data collection instruments, or the SLA Speech Tools repository are often used to search and download learning activities to help students improve their pronunciation in the classroom. The CMU-TalkBank repositories, which give access to well-documented CHILDES corpora are used to teach students how to analyse child language corpora. For example, the corpora have been widely used in undergraduate courses in language development to create handouts containing sample transcripts that students need to analyse and answer specific questions about language, or make more thorough analysis at the phonological, morphosyntax and discourse levels. Other teachers use the CHAT transcription program to teach students how to correct transcription errors or collect, record and transcribe child language data themselves. For more examples, please refer to the CHILDES Teaching Resources. For parallel corpora, terminology databases or research tools for the study of translated texts, take a closer look at the CLARIN repositories, EuroParl, ELRA-ELDA catalogues, META-SHARE, EuroTermBank, and TAPoR.For example, these repositories can be used to find and download parallel corpora in .tmx format to use for a translation assignment in the Computer-Aided Translation classroom, extract domain-specific terminology to create a bilingual glossary or use the corpus to train a machine translation engine in AI/Machine Learning programmes. Furthermore, many corpora available in CLARIN are directly integrated into concordancers, such as NoSketch Engine, KonText, Corpuscle, and Korp, which can be freely used in the classroom for linguistic research. To search for speech data and tools, the following repositories may be useful: the Bavarian Archive for Speech Signals (BAS), Speech Data & Technology platform, Open SLR,, and the Linguistic Data Consortium. The BAS repository shares speech resources of contemporary German and detailed information about what standards to use when compiling speech corpora and templates for informed consent forms for speakers participating in a research project. Moreover, the Speech Data and Tech platform offers a Transcription Portal for automatic transcription in English, German, Dutch and Italian. The ELAR (Endangered Language Archives) repository provides multimedia collections of endangered languages from all over the world, which can be browsed free of charge. For more advanced use of the resources, registration is needed. The archive also provides documentation, guidelines and training on research data management, using ELAN to create, transcribe and translate files and Lameta to create consistent quality metadata.blank

First and Second Language Acquisition

Translation Studies and Translation Technology Research

Language and Speech Technologies

Language Documentation

After identifying a suitable repository, teachers are advised to check whether it offers integrated, easy-to-use services and tools that can be used in the classroom and whether the repository has an active user community and provides training and support to its users, e.g.

-

- Does the repository provide training materials, workshops and webinars to teachers and trainers on how to use the services in education and training?

- Does the repository have an active user community? Do other colleagues use it in your field for research or teaching?

- Would the repository provide support if you were to develop a language resource with your students as part of a research project?

Once teachers become familiar with research data repositories and their services, they can help students choose suitable repositories for their projects, data type and research goals.

|

📖 Teaching and Learning Resources on Moodle To introduce students to research data repositories and the FAIR data principles, the following teaching resources can be used: Introduction to Language Data: Standards and Repositories

|

References:

- Wilkinson, M. D., M. Dumontier, I.J. Aalbersberg, G. Appleton, M. Axton, A. Baak, … B. Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3, 160018–160018. DOI: 10.1038/sdata.2016.18

After a short overview of the current research infrastructure landscape, this section introduces the CLARIN research infrastructure, an established European Research Infrastructures for “Common Language Resources and Technology Infrastructure.” CLARIN offers online access to an extensive range of written, spoken, or multimodal language resources, which can be used for research, training and education, and developing language technology applications. For an overview of the state-of-the-art in language technology (LT) development, refer to Agerri et al. (2023).

The term linguistic resource refers to (usually large) sets of language data and descriptions in machine-readable form, to be used in building, improving, or evaluating natural language (NL) and speech algorithms or systems. Examples of linguistic resources are written and spoken corpora, lexical databases, grammars, and terminologies, although the term may be extended to include basic software tools for the preparation, collection, management, or use of other resources. (Godfrey & Zampolli, 1997, p. 441)

The infrastructure operates through a distributed network of centres and services, allowing academic users from various fields, particularly in the humanities and social sciences, to use integrated applications to discover, explore, exploit, annotate, analyse or combine language datasets to answer new research questions. To promote accessibility and usability, all CLARIN repositories and services adhere to the Open Science and FAIR data principles (Wilkinson et al., 2016), making the deposited language data findable, accessible, interoperable and reusable.

If have never used the CLARIN infrastructure in research and/or teaching, you may want to watch this short introductory video with your students:

Member countries contribute to the ERIC financially and in kind, for instance, by hosting a CLARIN centre. Researchers, students and teachers from the member countries have access to several central core services and opportunities, which will be showcased throughout this guide. Users from non-member countries (e.g. Serbia, Slovakia, Malta, USA) can also access and explore all the central services and the metadata of the language resources and tools in the repositories freely, without having to log in.

| 👉 PRO-TIP: Check the list of participating consortia to learn if your country is a member of CLARIN. If your country is not a member of CLARIN, but you are interested in using resources with restricted access or depositing language resources in a CLARIN repository, please contact the central CLARIN office at [email protected]. |

References:

- Agerri, R., E. Agirre, I. Aldabe, N. Aranberri, J.M. Arriola, A. Atutxa, G. Azkune, J.A. Campos, A. Casillas, … A. Soroa. (2023). State-of-the-Art in language technology and language-centric Artificial Intelligence. In Rehm, G., Way, A. (Eds) European Language Equality: Cognitive Technologies. Cham: Springer.

- Godfrey, J.J. & A. Zampolli. (1997). Language resources. In A. Zampolli & G. Battista Varile (Eds) Survey of the State of the Art in Human Language Technology. Linguistica Computazionale, XII-XIII, pp. pages 381–384. Pisa: Giardini Editori e Stampatori (also Cambridge University Press)

- Wilkinson, M. D., M. Dumontier, I.J. Aalbersberg, G. Appleton, M. Axton, A. Baak, … B. Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3, 160018–160018. DOI: 10.1038/sdata.2016.18

The technical infrastructure ensures that academic users in all participating countries can discover and use the language resources made available and hosted by the various local data centres through a single sign-on access using a federated identity (i.e. your university credentials or your CLARIN account).

-

- All users can freely explore the CLARIN core services to search for language resources (data and tools) and expertise on specific language research and documentation topics.

- Due to license restrictions, some resources and services are only available for academic use. To access these resources, login is required through the CLARIN Service Provider Federations, using your institutional or CLARIN website credentials.

- If your university or academic institute is not listed in the list of organisations, you can request a CLARIN account here.

If help is required to access specific corpora, please check the articles on the CLARIN Knowledge Base.

|

📖 Teaching and Learning Resources on Moodle Introduction to Language Data: Standards and Repositories: To introduce students to the CLARIN research infrastructure, the following learning content from Moodle can be used: Presentations:

Assignment:

|

|

👉 PRO-TIP: To learn more about CLARIN and its history, we recommend these two articles from the CLARIN anniversary book:

|

This section gives an overview of the national CLARIN Knowledge Centres (K-centres), which the UPSKILLS consortium partners and educators may benefit from. These knowledge centres provide expertise, guidance and training on linguistic topics, data types and tools available via the infrastructure, language processing and linguistic research data management.

Use the keyword-based search or the direct links below to locate those K-centres with expertise in language resources and methods that might be relevant to your teaching and research area:

-

- Individual languages (e.g. Danish, Czech, Portuguese), language families (e.g. South Slavic) or groups of languages (e.g. morphologically rich languages, the languages of Sweden)

- Written text and modalities other than written text (e.g. spoken language, sign language)

- Linguistic topics (e.g. language diversity, language learning, diachronic studies)

- Language processing topics (e.g. speech analysis, building treebanks, machine translation)

- Data types other than corpora (e.g. lexical data, word nets, terminology banks)

- Using or processing families of language data that will exist for most languages (e.g. newspapers, parliamentary records, oral history)

- Generic methods and issues (e.g. data management, ethics, intellectual property rights, digitalisation of texts using optical character recognition technology)

Below, we highlight a few CLARIN K-Centres located in the countries of the UPSKILLS consortium partners to help raise awareness about the expertise of these centres and their added value for research and teaching. A full overview of all the CLARIN centres can be found here.

In Austria, there are two knowledge centres: In Germany, SAW Leipzig, a Text + / CLARIN Centre, focuses on preserving language and lexical resources for underrepresented languages and offers various research-based applications to explore them. For example, the Vocabulary Portal gives access to over 30 million sentences of German-language newspaper corpora crawled from the Web. In addition, the centre offers a corpora portal that offers a search interface to more than 1000 corpus-based monolingual dictionaries in 293 languages. The corpora can be downloaded. The platform can be used to search for words with similar context, examples of use, and neighbour cooccurrences and visualise the relations in a word graph. The CLARIN Knowledge Centre for Computer-Mediated Communication and Social Media corpora provides researchers and students with knowledge, support and training for developing and managing CMC corpora. More specifically, the centre provides FAIR guidelines on data management, such as using standards and formats, and advice on legal and ethical issues. Researchers, teachers and students can contact the centre via their helpdesk. More information about CMC corpora and how to work with this type of corpora is provided in 4.1.3.4. Computer-Mediated Corpora. ILC4CLARIN.IT offers services for browsing and querying corpora, file conversion, term extraction, lexical editing and text annotation, mainly for Italian languages. Teachers of syntax and morphology can use language resources and training materials from INESS, the Norwegian Infrastructure for the Exploration of Syntax and Semantics. This centre is part of the CLARINO Bergen Centre and the CLARIN Knowledge Centre for Treebanking. It provides access to treebanks that are databases of syntactically and semantically annotated sentences. The platform is language-independent and can be used for building, accessing, searching and visualising treebanks. Data from INESS has also been used in a Master’s course on computational language models to show how empirical corpus data can strengthen or challenge hypotheses about grammar. Students quickly learn to use the system in projects for term papers and master’s theses. Victoria Troland’s master’s thesis, for instance, used INESS to extract syntactic markers from syntactically analysed Norwegian novels and subsequently used these markers as a basis for an author identification model. To teach linguists how to query treebanks, see the INESS Search Walkthrough and the Parseme tutorial: Studying MWE annotations in treebanks. The CLASSLA K-centre provides expertise and training on developing language resources and technologies for South Slavic Languages, including Slovenian, Slovene, Croatian, Bosnian, Serbian, Montenegrin, Macedonian, and Bulgarian. The platform gives access to research and tools for language processing, such as information extraction, language understanding, named entity recognition, processing of morphologically rich languages and speech recognition. The centre is managed by CLARIN.SI, the Institute of Croatian Language and Linguistics, and CLADA.BG. Moreover, it offers documentation on how to use the CLARIN.SI infrastructure in Slovene, Croatian and Serbian. A detailed description of the centre is available in Tour de CLARIN. Teachers and researchers of bilingual language development and sign language are referred to the CLARIN K-centre for Atypical Communication Expertise (ACE), hosted by Radboud University in Nijmegen. Internationally, the centre is linked to the DELAD task force, an initiative that provides guidelines for data acquisition, processing and sharing of corpora and datasets that contain sensitive data (e.g. speech, audio and transcripts collected from people with language disorders). Moreover, the centre collaborates closely with both the Language Archive and the TalkBank repositories to host and give access to corpora of speech disorders securely and in a GDPR-compliant way. Educators can find some examples of well-documented speech corpora on the K-centre website, which can be used to teach students how to analyse disordered speech. Conversely, researchers can use the available corpora to refine the analysis methods and formulate and test hypotheses. For teaching and learning materials related to the impact of GDPR on language research and how to handle sensitive research data collected from human subjects, see this impact story: Navigating GDPR with Innovative Educational Materials. Students and researchers working with patient data learn how to perform a Data Protection Impact Assessment (DPIA) through role plays and use cases. 📖 Teaching and Learning Resources on Moodle To make students aware of key ethical issues in data collection and sharing of pathological speech data, see the guidelines on the DELAD website and use the following tutorial on Moodle: Several universities that are part of the CLARIN-CH consortium, develop language resources, datasets, and tools and provide expertise and training in language sciences. Teachers and students, searching for language resources, are referred to the collection of CLARIN-CH Resources and the national Linguistic Corpus Platform hosted by LiRI. Here, we highlight the computer-mediated communication (CMC) corpora collected in the What’s up, Switzerland? project. It contains 617 WhatsApp chats in all four national languages of Switzerland and their varieties, and they are freely available for linguistic research. To learn how to use and query the corpus, see the project website: https://whatsup.linguistik.uzh.ch/start. This project is also a good example for students and researchers who want to learn how to process, handle and annotate CMC corpora. blank

Austria

Germany

Italy

Norway

👉 PRO-TIP: To learn how to query linguistically annotated corpora, see Kuebler, S., & H. Zinsmeister. (2014). Corpus Linguistics and Linguistically Annotated Corpora, London: Bloomsbury. Retrieved from https://ebookcentral.proquest.com/lib/uunl/detail.action?docID=1840024

South-Slavic Countries

👉 PRO-TIP: In June 2023, the centre launched CLASSLA-web , a new collection of large web corpora for Slovenian, Croatian and Serbian, which can be queried using the CLARIN.SI concordancers (noSketch engine). See this tutorial to learn how to query these corpora.

The Netherlands

👉 PRO-TIP: For examples of information sheets and consent forms for collecting speech data from children, download the templates from the DELAD website.

Switzerland

| 👉 PRO-TIP: Please remember that even if a country is not a member of CLARIN, teachers, students and researchers can still benefit from all the resources and tools hosted by other national repositories that are publicly available. |

To summarise this section, teachers can use the CLARIN local networks to:

- Contact the CLARIN National Coordinator and/or national helpdesks to learn more about what CLARIN has to offer in a specific country;

- Contact a K-centre to find support for a university course/programme, check for training opportunities and seek guidance in getting access to the resources and tools in their country;

- Apply for a mobility grant to visit a centre or set up a teacher exchange and training programme;

- Search for other funding opportunities, e.g. to organise events or train-the-trainer workshops.

| 👉 PRO-TIP: More information about the CLARIN K-Centres is available in Tour de CLARIN and Impact Stories, which showcase innovative research and educational projects. |

“The integration of infrastructures into teaching should be seen more as a journey, rather than a goal in itself.” (Vesna Lušiky, Lecturer in Translation Studies, University of Vienna)

When creating a research-based course in linguistics and language-related disciplines, extensive planning and research are necessary to ensure that the course meets students’ needs and achieves desired learning outcomes. It is crucial to access research infrastructures, repositories, and language resources that teachers and students can freely use to collect, process, analyse, and deposit language data in the classroom. Free resources and tools can be used to design an engaging, and practical hands-on curriculum.

This part of our guide showcases how CLARIN core services, collections of open corpora and online natural processing tools can be used to enhance the teaching of language data discovery, analysis, and archiving skills. As corpora are one of CLARIN’s most valuable language resources, we begin with general recommendations for teachers who consider integrating data-driven learning and corpus-based pedagogy in language-related disciplines. We continue with a brief overview of the CLARIN central services for data discovery, analysis and processing, which are presented in more detail through quick step-by-step guides and references to additional teaching and learning content on Moodle. Finally, we show how to find natural language processing tools in the infrastructure that are often used or may be suitable to explore in educational settings.

The insights collected from the lecturers participating in the UPSKILLS events revealed that the successful implementation of infrastructures, language resources and tools in linguistics and language-related programmes depends on several factors:

-

- Teachers’ own perception, attitude, and confidence in applying data-driven learning and corpus technology tools in the classroom;

- Students’ background and their study load;

- The flexibility of the curriculum.

Hence, this section provides general recommendations on how teachers can improve their perception and skills in applying corpus-based pedagogy and technologies in the classroom through the CLARIN knowledge infrastructure and wider linguistic community. Teaching assistants and trainers who never used corpora in teaching may find this section useful.

One of CLARIN’s most essential language resources are corpora of various types, modalities and languages. Corpora are often used to answer research questions in both social sciences and humanities domains (McCarthy & O’Keefe, 2010), and in data-driven learning (DDL; Johns, 1991) to encourage students to analyse corpus data independently using corpus query platforms, create hypotheses, identify linguistic patterns and formulate rules, and verify the validity of grammatical rules. DDL can benefit, for example, foreign language learners by providing them with access to corpora containing authentic texts and user-friendly tools to explore linguistic patterns and trends in language use and reach their own conclusions (Bernardini, 2002; Boulton, 2009 & 2017). This approach gives students autonomy over their learning process, helps them develop critical thinking skills and turns them into “researchers”. According to the literature (Boulton 2009; Gilquin & Granger, 2010), data-driven learning based on corpora has not yet been widely adopted in some language-related programmes because teachers are often unaware of the benefits of using corpora for pedagogical purposes. Second, teachers need a high level of corpus literacy (Mukherjee, 2006) to be able to develop corpus-based pedagogy (CBP), “the ability to integrate corpus linguistics technology into classroom language pedagogy to facilitate language teaching’’ (Ma et al., 2021, p 2). Furthermore, teachers need to learn to switch to a “less central role’’ in the classroom than in traditional teaching and guide the students through the learning process (Gilquin and Granger, 2010). Finally, DDL may not suit all learner types because it requires certain technical expertise to work with the corpus technologies. It can also be time-consuming, as learners need to analyse concordance results and draw their own conclusions. While students appreciate the use of corpus concordancers in the translation classroom, it may take a long time to teach them how to collect enough evidence to be able to make generalisations. Students may also find it difficult to understand how to transform knowledge from another language to another, connect several language resources (e.g. WordNet vs Valency Lexicon), apply linguistic tests, and make decisions regarding translation equivalents. (Petya Osenova, Professor and Researcher in Syntax, Morphology and Corpus Linguistics, Faculty of Slavonic Languages, St. Kl. Ohridski University, Sofia, Bulgaria) Teachers who never used corpora and corpus technologies in the classroom are recommended to first delve into the literature to understand whether incorporating corpus-based pedagogy (CBP) and data-driven learning in their curriculum would benefit their specific linguistic sub-discipline, teaching style and the students’ background, level and learning style. A good literature review of how corpora are used as a pedagogical tool in various areas of linguistics, see “Part III. Corpora, language pedagogy and language acquisition” in the Routledge Handbook of Corpus Linguistics (O'Keeffe & McCarthy, 2022). Other scenarios in which corpora are used in educational settings are teaching corpus linguistics as an academic subject, using corpora to inform syllabus design and development of educational resources (e.g. dictionaries and grammars) and involving students in the development of language resources (collect, design, and compile corpora) (Cheng & Lam, 2022). Finally, students can also be taught how to share and archive a corpus at the end of the project, solving all the issues related to handling personal and sensitive data. See Section 6 in the Moodle UPSKILLS learning content, Introduction to Language Data: Standards and Repositories. References: Before integrating corpora or any other language resource or tools in the classroom, teachers should first gain some hands-on experience themselves. For example, learn basic methods in corpus linguistics, different types of corpora and corpus technologies, how to extract relevant data from corpora, count occurrences of phenomena, and do statistical analyses (Lin 2019). Some of the active learning content developed in UPSKILLS and available on Moodle can be a good starting point: Besides proficient use of corpora and corpus technology, teachers will also need pedagogical knowledge to design corpus-based activities and assessments that match their course's overall goals and students' learning needs (Ma et al., 2021). As practice shows, research is often prioritised over teaching, with lecturers having excellent research skills but poor pedagogical skills (van Dijk et al., 2020). Below, we recommend established workshops, summer schools and free online courses, which could help teachers build up both their technical and pedagogical skills: Finally, we also recommend keeping an eye on the upcoming CLARIN workshops, which aim to educate and train various stakeholders on the use of corpora and other technologies. References: Sometimes, classes in Applied Linguistics, such as Corpus Linguistics and Computational Linguistics, and Translation Studies consist of students with mixed backgrounds (language and humanities vs. computer scientists) and coming from different countries. Such classes pose additional challenges in teaching and learning because the teacher needs to find ways to motivate language and humanities students to work with computational methods and tools. In contrast, the computer scientists need to learn about linguistics. Furthermore, access to multilingual language data repositories and resources is needed to design learning activities in the students’ preferred languages. For example, the Virtual Language Observatory and CLARIN Resource Families may be useful in multilingual language technology classes because they provide access to different types of corpora and datasets in multiple languages. Therefore, it is important to identify students’ backgrounds, languages, technical skills, research interests and their level of interest in technology to be able to tailor the language resources and research methods chosen for the classroom. If the learning activities cater to the needs of the students’ linguistic concerns or research interests, they will feel more motivated to engage with technology. Whenever possible, classes should be tailored to certain student groups instead of attempting to meet the needs of a varied group of students in each course (Baldridge & Erk, 2008). Try to split the RBT course into feasible portions with clear learning outcomes and consider students’ own interests in making the connections between linguistics and the more technical parts (e.g. programming in Python, data handling). You could start with the linguistic questions, then use technical knowledge to address the questions and translate the answers back into the research domain. (Louis ten Bosch, Associate Professor of Deep Learning and Automatic Speech Recognition, Radboud University, the Netherlands) References: After consolidating their knowledge about scientific research and corpus linguistics, teachers are invited to use this guide and the accompanying course on Moodle, Introduction to Language Data Standards and Repositories, to get acquainted with the CLARIN central services and identify those language resources and tools suitable to include in teaching. The exploration may be easier if it is based on a clear research question, study or project that teachers intend to formulate in the classroom. Consider the type of data the corpus should have, register, languages, size, availability, the time period that the corpus covers, etc. When testing and selecting corpus technology tools, it is recommended to test them properly to identify those functions that will help achieve a specific goal in the classroom. The final choices and implementation of language resources and tools in the classroom are often influenced by teachers’ own perceptions, attitudes, and confidence in applying corpus technology in the classroom (Leńko-Szymańska, A., 2017; Ma et al., 2022). References: Once appropriate resources and tools have been identified for classroom use, the learning outcomes of the course should be adapted in order to target practical skills related to the use of infrastructure, create and curate learning materials and design corpus-based activities and assessments. To save time and effort, teachers are recommended to pick and choose units of individual learning activities from the UPSLILLS Moodle platform, build on them and share them with their students. Instructions on how to export and reuse learning content are included within each learning block. When creating learning content for technical-oriented tasks and using different tools, remember that tools change rapidly, so updating the content will require time and effort. Therefore, to save time, consider reusing tutorials provided by the infrastructure and tool providers, and adapting them to match the students’ learning objectives and levels. If learning materials need to be created from scratch, the focus should be on making them as modular as possible so that other teachers can easily update and reuse them. Moreover, in multidisciplinary courses, learners come from different fields and backgrounds, and some may not yet have all the required skills. This can place an extra burden on the teacher. In online teaching, the issue might be partially solved by creating small, self-contained and well-described learning objects that can be used flexibly in many different courses and replaced or removed when the content becomes obsolete. Effective planning is crucial when introducing corpora in the classroom. We have compiled a general framework of helpful teaching strategies from Sripicharn (2010), Tribble (2010), and Lessard-Clouston & Chang (2014) that can be referenced when starting to teach with corpora regardless of your linguistic sub-discipline. After students have increased their corpus literacy skills, they can be introduced to data-driven learning by challenging them to work on a small-scale research project and answer a specific research question. Bennett (2010) proposes a general framework for using corpora in language teaching, similar to the one above but starting from a research question. According to lecturers’ testimonials in Simonovic et al. (2023), using technology in the classroom (even when starting from a simple task, such as organising data in an Excel file) can disrupt students’ learning experience when unexpected technical issues arise. Students will need guidance and support throughout the course. For example, more advanced students may be affected if the lecturer needs to invest more time in guiding those students who lack basic scientific knowledge and technical skills. A more interdisciplinary approach to teaching language and linguistics could help tackle such issues. For example, students could be recommended to follow computer science courses, while teachers could create joint projects and assignments to ease their workload. However, if such an interdisciplinary approach is not possible in your programme, here are a few practical tips. Tool handouts, video tutorials, and a pre-defined folder structure for file organisation can help minimise technical disruptions during class. Collaborative online environments like Google Drive, Colab, GitHub, or Open Science Forum (OSF), containing all the files and instructions for the class, can help increase teaching efficiency and minimise disruptions. Additionally, creating a forum within the learning management system (such as Moodle or Blackboard) can assist in reducing the teacher's workload by allowing students to interact with their peers and ask questions about any technical difficulties they may face while completing homework or class assignments. This approach promotes independent problem-solving skills among students and only requires teacher intervention when necessary. References: Undertaking and monitoring research projects involving language resources can be challenging and require continuous guidance and feedback to help students improve their work. With many changes that may occur during the research process, it is easy to get lost and confused. However, keeping track of information at different stages can help clarify ideas, resulting in faster progress. In line with this, our consortium partners from the University of Zurich have developed an interactive research tracking tool that enables students to track their progress during projects. Students fill in the template using the student version of the tool. Teachers then use the teacher's version of the tool to provide brief feedback in the designated field. At the project's onset, it is up to the teachers and students to agree on the reporting frequency. Each submitted report should receive short feedback from the teacher. The preferred sharing mode (email, online drive, etc.) will also be decided. The research tracker can be downloaded from the landing page of this guide. References: Language resources and technologies can support teaching and research across various disciplines to teach students how to develop a data-driven mindset and draw insights and conclusions from large volumes of text. While testing and using various language resources and tools corpus classroom use, collecting feedback from students on the usability of the resources, tools and infrastructure and their usefulness in educational settings is beneficial. For example, Lusicky and Wissik (2016) evaluated the usability of language resources like corpora and translation memories, disseminated through research data catalogues such as Virtual Language Observatory, Meta-Share, and ELRA, for translation studies scholars and students. According to the authors, repositories can help researchers make their language resources more FAIR and meaningful for translation studies by including specific metadata catering to the field. For instance, in the case of parallel corpora, it is essential to know the original language, the reliability of the source texts, whether the translation was obtained via post-editing machine-translation output, as well as the names of the translators and their native languages. The following criteria could be used to evaluate your own experience with the tools and also collect insights from students. All the feedback, suggestions for improvements or additional features could then be shared with the infrastructure and tools developers to improve the subsequent versions of their tools. See the testimonials below as an example: The language resources available via the CLARIN infrastructure are very important for my teaching. It is vital that the same materials are persistently available and that they can be cited consistently. I try to teach first-year students to search for corpora and other resources that can be relevant to them via CLARIN platforms, and the more advanced students can benefit from tools, good practices and guidelines that help them to process and make available the resources they create. Praat and ELAN have been around for a long time, and teachers can be confident they will remain accessible in the coming years. University students need good examples of reproducibility, scientific references and citation practices for tools and data since these will be the building blocks they will work with in the future. (Mietta Lennes, Lecturer of Speech Technologies, University of Helsinki) The students perceive corpora as something entirely new, they tend to get scared initially and need some time to familiarise themselves with different types of queries, and they tend to struggle with regex. But these challenges can be overcome. (Anonymous lecturer, UPSKILLS Questionnaire of Lecturers) References:blank

1. Explore best practices in data-driven learning & corpus pedagogy

2. Build up your corpus literacy and pedagogical knowledge

3. Know your students

4. Identify and select language resources and tools

5. Curate, adapt or create learning content

6. Teaching in the classroom

👉 PRO-TIP: A good overview of corpus-based activities per linguistic sub-discipline is available in the Routledge Handbook of Corpus Linguistics (O'Keeffe & McCarthy 2022).

👉 PRO-TIP: See the student projects that the UPSKILLS consortium partners and CLARIN jointly developed in the Processing Texts and Corpora and Introduction to Language Data: Standards and Repositories on Moodle.

7. Tracking research projects

To learn more about student project design and reporting formats, please see the Guidelines for the Students' Projects and Research Reporting Formats (Simonović, M. et al., 2023).

8. Evaluate and share your experience

Teachers can use the CLARIN central services in the classroom to teach language data discovery, (re)use, sharing, citing and archiving.

-

- Use the Virtual Language Observatory (VLO) to search for full-text language resources of different types, languages, modalities, time periods, formats and licences.

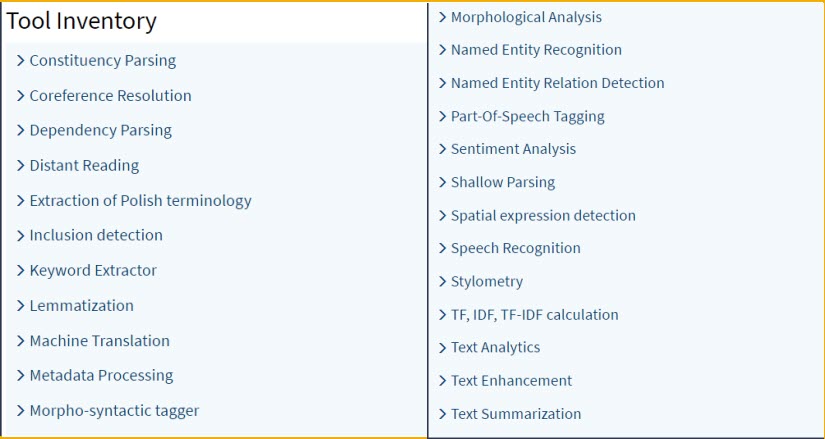

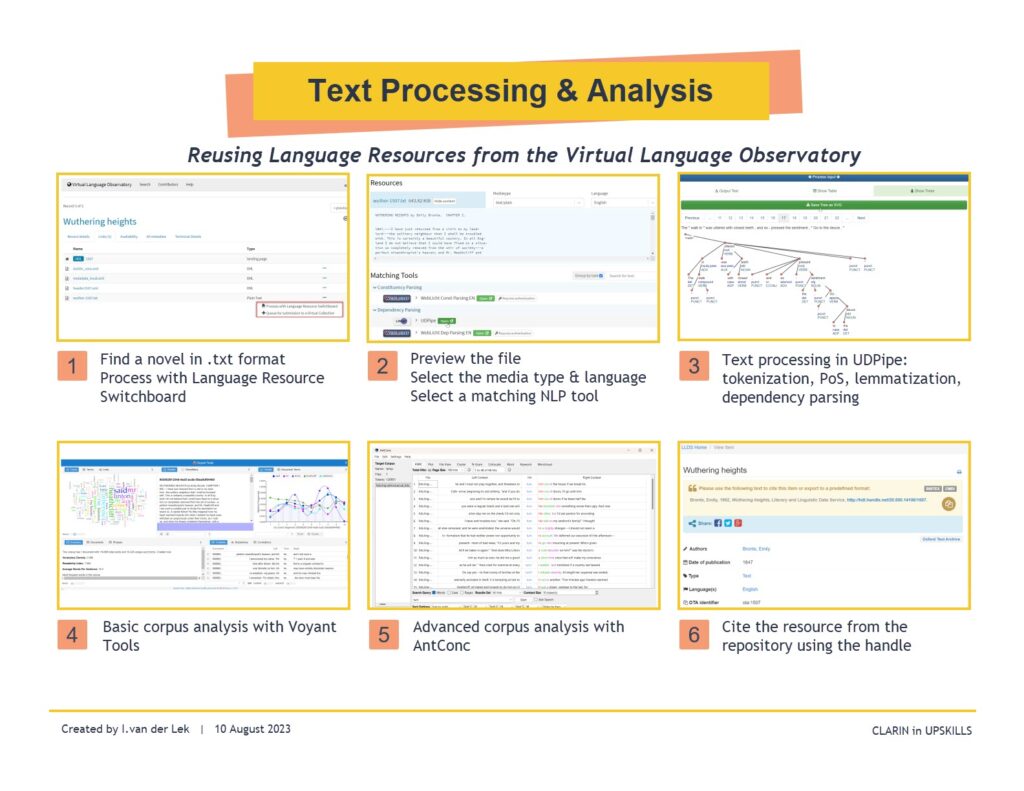

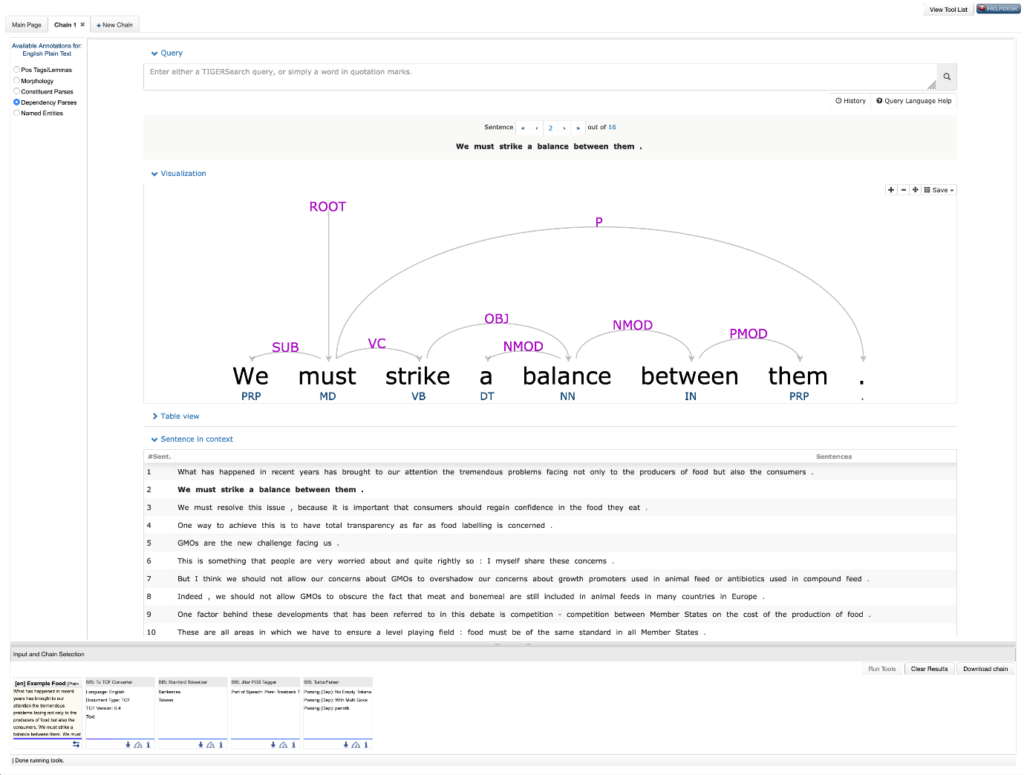

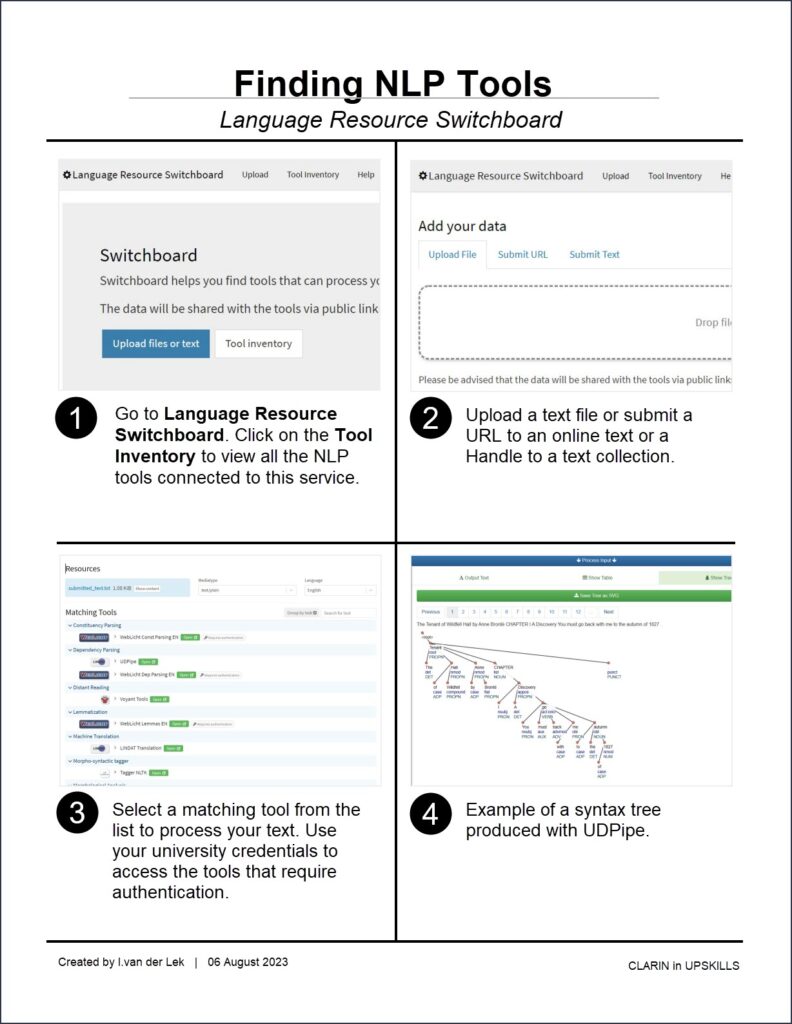

- Find a matching natural language processing tool via Language Resource Switchboard (LRS) to process language resources or texts and perform more advanced linguistics tasks, such as different types of automatic annotation, morphological analysis, distant reading, terminology and keywords extractions, topic modelling, etc.

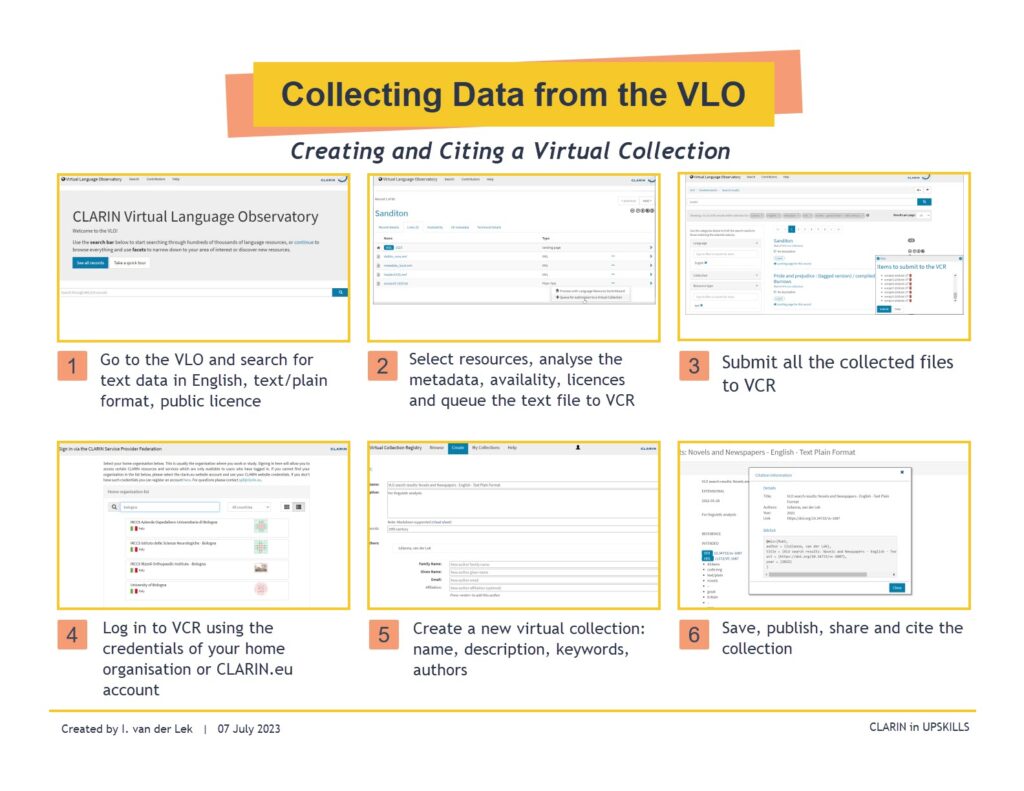

- Collect the resources discovered in the VLO or any other research data repository in a virtual collection in the Virtual Collection Registry (VCR) that can be cited and shared with other teachers or students. This service allows users to save resources for later exploration and processing. In contrast with Zotero or Zenodo repositories, the VCR allows you to add multiple resources to a collection and cite the entire collection using persistent identifiers, such as handle or DOI.

- Use the Federated Content Search to search for specific linguistic patterns across several collections of corpora in several repositories simultaneously. You can use CQL for queries and download the search results in different formats.

- Search for corpora for specific registers and languages in the Language Resource Families. Most corpora are freely available, can be cited, and downloaded from the repository where it is located. Some corpora are directly available for query in online concordancers, such as KonText, Korp and NoSketchEngine.

- Language resources created collaboratively as part of a research-based project or in the context of a thesis can be deposited, shared and archived through a suitable CLARIN repository. The repositories adhere to the FAIR guiding principles for research data management and sharing. See the depositing services for general depositing guidelines and an overview of the centres providing support in this process.

If you have never used CLARIN before, watch the video below to learn how the Virtual Language Observatory and the Language Resource Switchboard are integrated to enable language resource discovery and reuse for research purposes:

| 👉 PRO-TIP: To help you evaluate the suitability of the CLARIN infrastructure (or any other infrastructure) for teaching language and linguistic research, we recommend first exploring and testing the core services, some tools and language resources yourself with the help of this guide and accompanying learning content on Moodle, Introduction to Language Data: Standards and Repositories, especially Unit 3: Finding and (Re) Using Language Resources in CLARIN Repositories. |

This section presents the CLARIN central services, which teachers and students can use in the planning and data collection phases of a research project to search and locate language resources that can help answer specific research questions, replicate a dataset, build a corpus, or train a language model. The central services for data discovery are the Virtual Language Observatory, Federated Content Search, Resource Families, and the Virtual Collection Registry. While many language resources are accessible through CLARIN, we will mainly demonstrate how to search, locate and use corpora of different types, languages and modalities.

After getting acquainted with the basic functionalities of each service and understanding what they can be used for, try to test it by formulating a research question for a specific register and language (s). This would make the searches more focused and identifying appropriate corpora and tools easier.

|

📖 Teaching and Learning Resources on Moodle

|

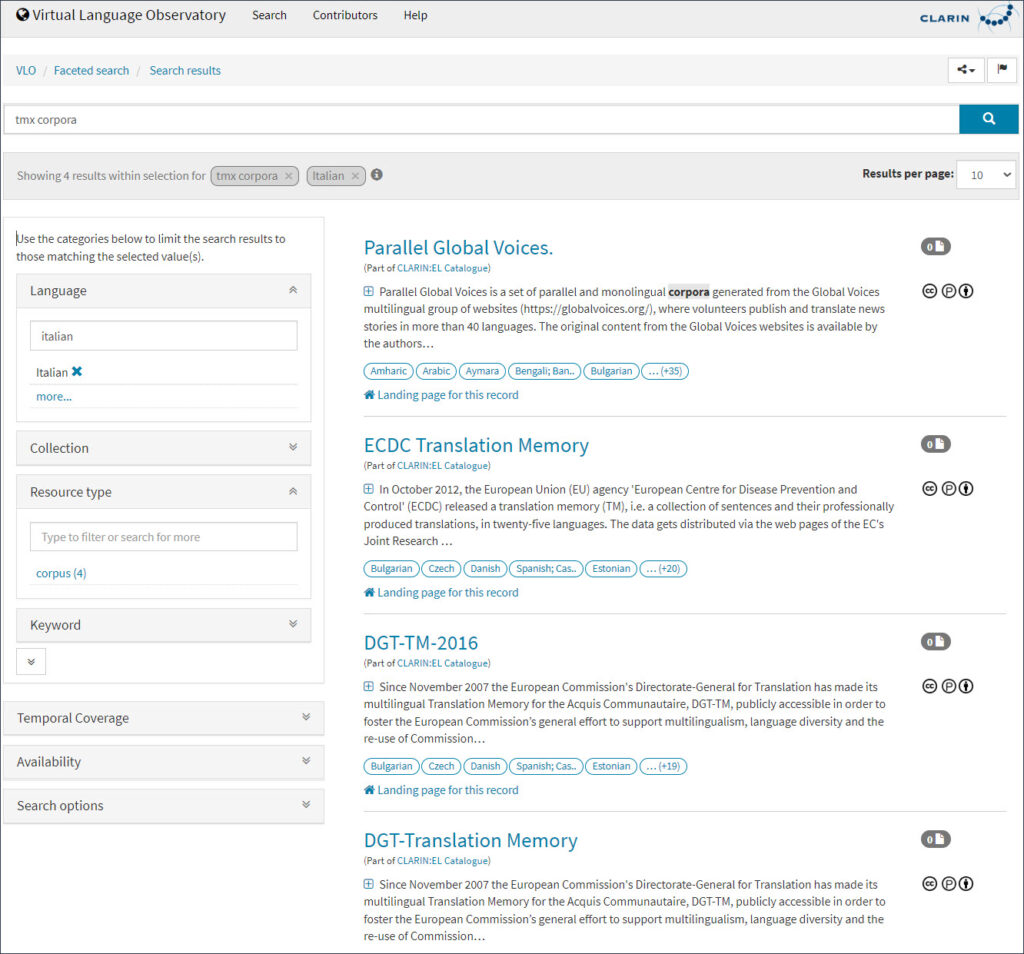

The Virtual Language Observatory (VLO) central catalogue automatically harvests metadata on language resources contributed by researchers in CLARIN member and observer countries. It offers advanced search functionalities that facilitate the easy discovery of language resources, such as corpora, lexica, grammars, multimedia recordings, digitised texts such as books, articles, and transcripts of parliamentary debates, software & web applications, and even training materials.

Faceted Search in the VLO

Because of the large amount of data, there are multiple ways of exploring the VLO, e.g., full-text search, facet browsing, or geographic overlay. The advanced filters can help narrow down the search results and find resources or text collections in a specific language, resource type (text, audio, dataset, corpus, software, video etc.), modality (spoken, writing), format (text, audio, image, specific keywords), temporal coverage or availability (for public, academic, restricted use).

When searching for resources, remember that the search results might contain duplicate entries, incorrect or incomplete titles/descriptions. The flag icon on the top right corner can be used to report issues via the VLO feedback form. The service is continuously improved to facilitate easy data discovery.

Each resource in the VLO can be accessed directly via the unique/persistent identifier, i.e.handle, pointing to the landing page of the repository where the resource creator initially deposited the resource. Teach the students to use this handle to reference the landing page online and in their publications. CLARIN endorses the Data Citation Principles.

| 👉 PRO-TIP: If you are unfamiliar with the current practices in citing language data, see Unit 4 of our Introduction to Language Data: Standards and Repositories on Moodle: Citing Language and Linguistic Data. |

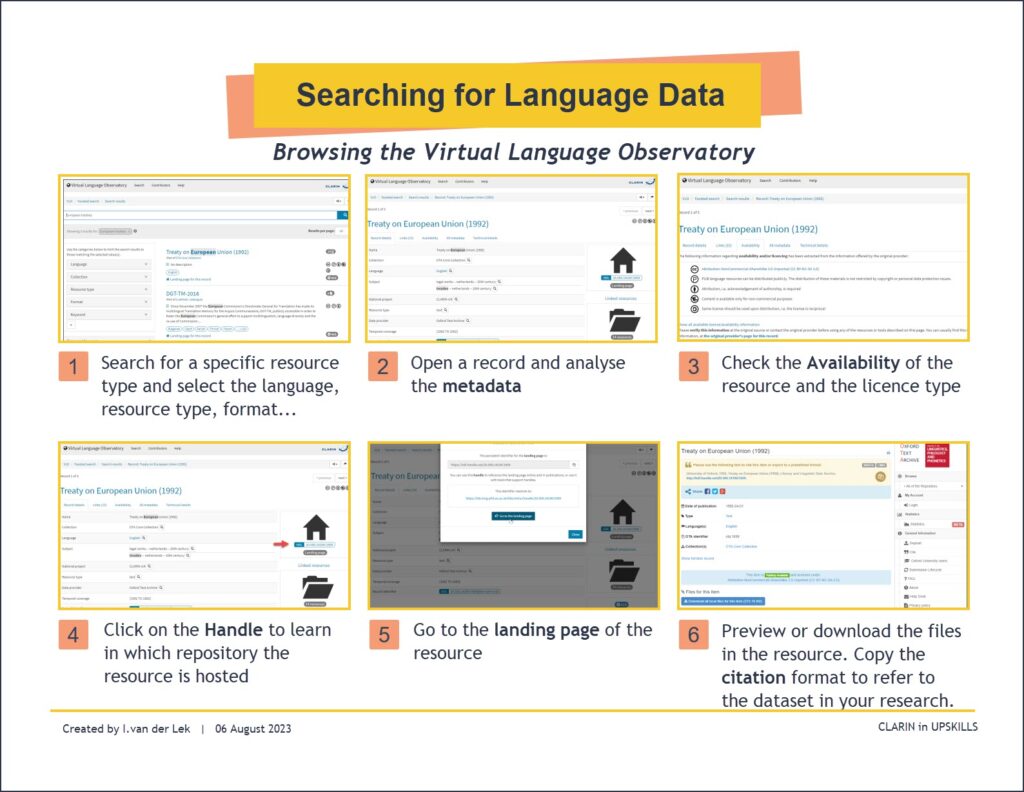

Assuming you are searching for a digitalised text collection of European treaties to build a corpus, go to the VLO and perform the steps described in the quick guide below. Was it easy to use, find and access the Treaty of European Union collection?

Searching for Language Data in the Virtual Language Observatory

When you find a language resource in the VLO that might be interesting to explore for research or teaching, carefully read and interpret the metadata fields used to describe the resource, especially the record details, links and their availability. Each resource is described based on metadata standards (e.g. CMDI, Dublin Core, OLAC), which provide helpful information about who created the resource and how. It is also advisable to review the metadata of the resource from the perspective of your specific linguistic sub-discipline. For instance, a study conducted by Lusicky & Wissik (2016), mentioned earlier, assessed the usability of language resources such as corpora, translation memories, terminology resources, and lexica from the VLO and other research data repositories (META-SHARE, ELRA) for translation studies scholars and students.

Further, the VLO records contain information about the licence assigned to the resource and terms and conditions of use. Although CLARIN advocates for open science and access, data creators may restrict access to data collections due to sensitive data. It is important to note that resources and tools in CLARIN are assigned either a public (PUB), academic (ACA) or restricted (RES) licence. Public resources can be reused without copyright restrictions, whereas academic or restricted resources can only be used under specific conditions.

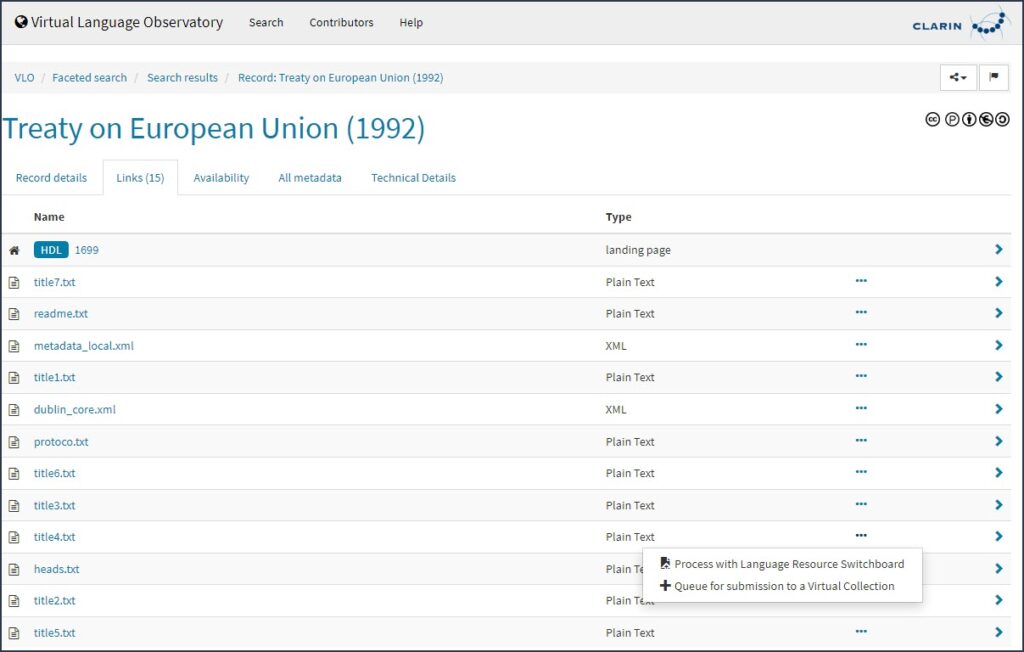

Finally, the digital text collections in the VLO can be processed and analysed with integrated Natural Processing Tools. For example, suppose you find a resource in the VLO in plain text format. You can process it directly using one of the Language Resource Switchboard tools, e.g. use WebLicht to annotate the plain text file automatically, use UDPipe to produce syntax trees or use the LINDAT machine translation service to translate the file into another language. You will learn more about Switchboard in Section 4.2. Data Processing and Analysis. If you do not want to process the file immediately, you can choose to queue it for submission to a Virtual Collection. The following figure shows how to access the Switchboard and Virtual Collection Registry directly from the Links area of the VLO record.

Process the text file with Switchboard or send it to a Virtual Collection

The metadata quality of language resources and their suitability for educational settings can be evaluated through a simple checklist, e.g.,

-

- Does the language resource provide sufficient metadata to help you decide to use it in your research and/or teaching? If used for research purposes, does it sufficiently address the research problems?

- Who created the resource, and when?

- Is it reliable? How often has the resource been viewed and/or downloaded by others (researchers, teachers, students)?

- What type of language data has the resource creator collected, and from whom, when and where? How was the data collected and processed?

- Does the resource creator provide any information about how the resource can be used in either research or teaching?

- In what format the data and metadata are available? Are the files in the resource available in a common format compatible with other tools?

- Does the repository provider offer an online service to help you look inside the language resources/ dataset?

- If the infrastructure does not provide any tools to preview or explore the contents of the resource, can you and your students download it and use it for processing and analysis in other tools without restrictions?

- Do you and your students need special software skills to process and analyse the dataset? If you lack the skills to process a large dataset, you can try to contact the data provider or the research data management department at your university and ask for help.

After the students learn to evaluate resources and their metadata properly, teach them how to use them in the tools available through the infrastructures or other preferred linguistic tools.

|

📖 Teaching and Learning Resources on Moodle Introduction to Language Data: Standards and Repositories

|

References:

- Andreassen, H. (2019, March 04). The acquisition of definiteness: Analysis of child language data. OER Commons.

- Lušicky, V., & T. Wissik, T. (2016). Evaluation of CLARIN services, user requirements, usability, VLO, and translation studies. In Selected Papers from the CLARIN Annual Conference 2016, Aix-en-Provence, France, pp. 63-75. Linköping University Electronic Press, Linköpings universitet.

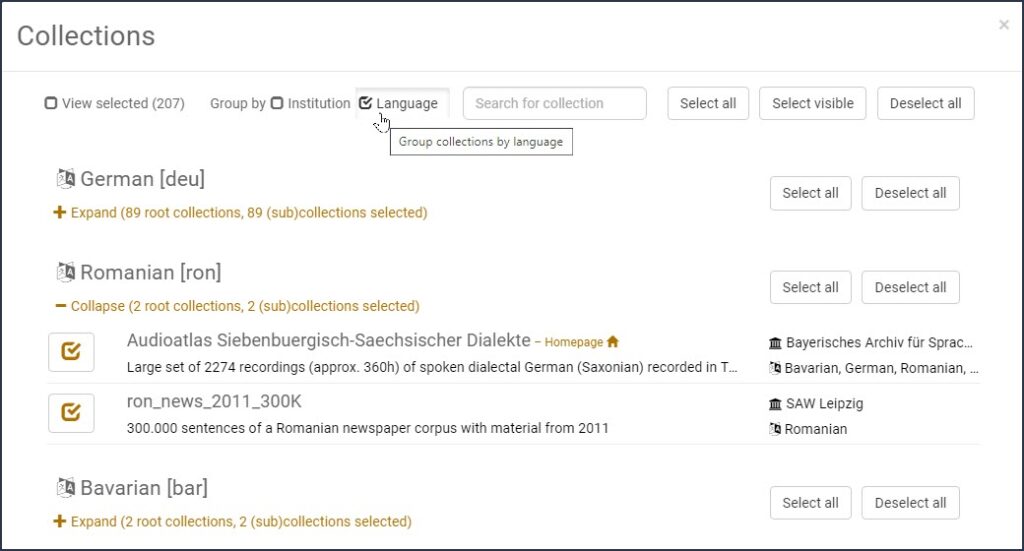

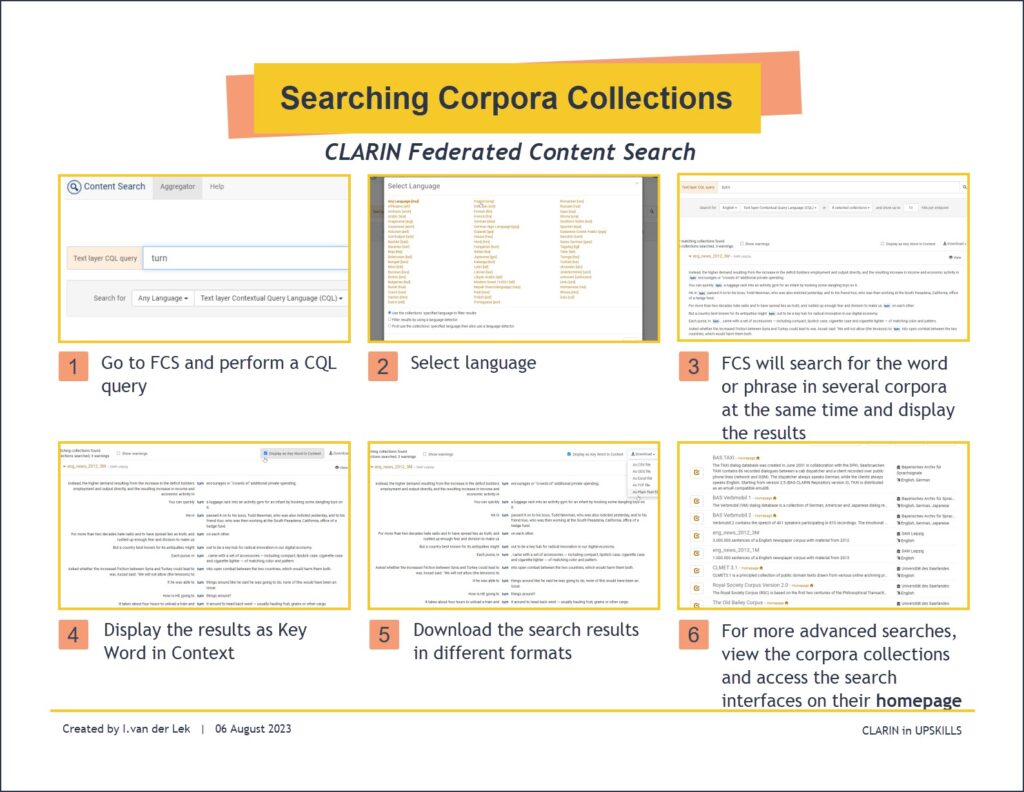

While the VLO allows only metadata searches to locate full language resources and texts, one can use the Federated Content Search (FCS) to identify specific linguistic patterns (e.g. collocations) across various corpora hosted in different CLARIN centres simultaneously. The corpora stay at the centre where they are hosted; therefore, the underlying technique is called federated content search. As of April 2023, there are 207 corpora searchable via FCS in various languages.

Collections of corpora browsable in Content Search sorted by language

To view the available corpora per language, go to the Content Search main interface and click on Collections. Then, tick the Language box to group the collections per language, as in the figure above. Finally, click the + sign to expand and view the corpora available for a specific language. Monolingual searches can be performed with the help of integrated Contextual Query Language (CQL) queries. The search results can be displayed as Keywords in Context and downloaded in various file formats.

Use the steps described in the following quick guide to learn how to use the FCS service. You can, for example, search for collocations in a specific language and export the results.

Searching corpora collections through Federated Content Search

| 👉 PRO-TIP: Although the service offers basic functionalities, it can help teach students basic corpus query searches to investigate how certain words and phrases are used in context. To perform more sophisticated queries, view the collections of corpora available through the FCS service and go to the search interface of the centre hosting the text collection. |

Research has demonstrated that scholars, instructors, and learners have used corpora for “data-driven learning” (Bernardini, 2006) to examine genuine language usage, contextual subtleties, and actual linguistic variations, allowing them to make generalisations about language use. Teachers who already use corpora in their own research and/or teaching methods may already have one or more preferred corpora and corpus analysis tools they are accustomed to using in the classroom.

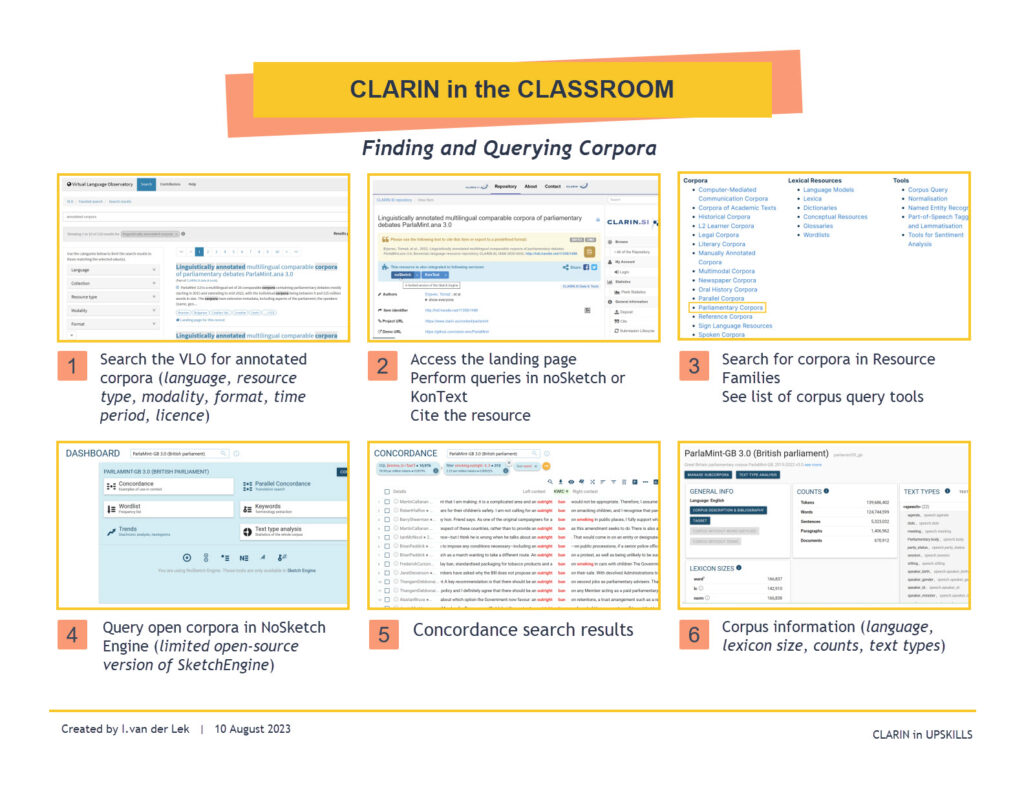

For those considering to integrate corpora into their course or programme, the CLARIN Resource Families can be an invaluable starting point. The families include various corpus types (and tools), each catering to specific linguistic or research needs (see below image), and are meant to facilitate comparative research. Additionally, the listings in each resource family are sorted by language and provide brief overviews about the size of the resource, text sources, data type, time periods, annotation types, standards, formats and licence information. Unlike the Virtual Language Observatory, this overview of corpora collections is much user-friendlier and makes it easier to identify what type of corpora matches the goals of your course/project/assignment.

Finding and Querying Corpora

| 👉 PRO-TIP: If this is your first time working with corpora, see A Practical Handbook of Corpus Linguistics by Paquot & Gries (2020) to understand how different corpus types can be designed, analysed and compiled (see chapters 1 to 3). The handbook can also be used as a course book in the classroom or for individual study. |

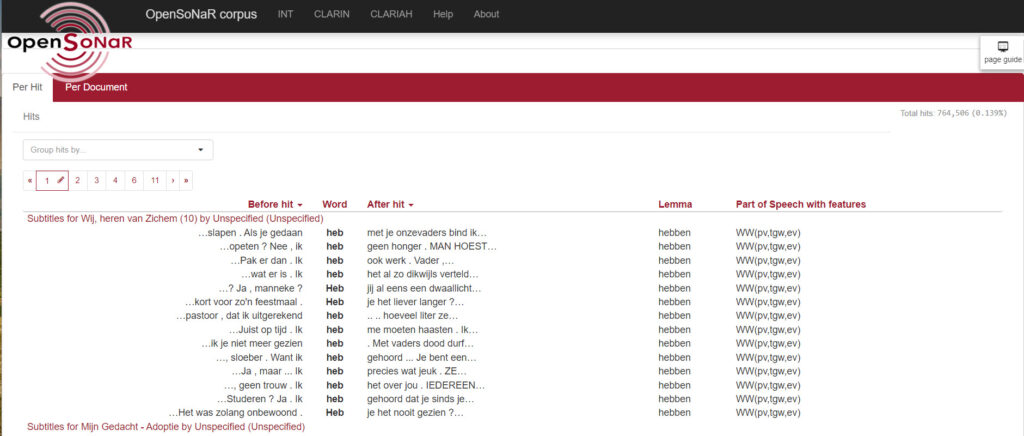

Most importantly, many corpora listed in the resource families are available in open access and can be directly queried in online concordancers, such as Korp, Corpuscle, KonText and noSketchEngine. These concordancers are integrated into the infrastructures of the CLARIN national repositories in Finland, Norway, the Czech Republic and Slovenia, giving access to many corpora developed by researchers in their communities. Moreover, some corpora come with detailed tutorials demonstrating how to use them for research. The tutorials are available in open access, and you can refer the students to follow them independently or adapt them for classroom use. All these aspects make the resource families of corpora a great open educational resource, which can be a valuable addition to the teachers’ toolbox, especially in the era of hybrid learning and teaching.

Nevertheless, several factors can make locating an appropriate corpus for educational purposes challenging. First, as Deshors (2021) points out, different corpora serve different purposes. While some specialised corpora are more suitable for research (e.g. Louvain International Database of Spoken English), others are used as teaching/learning resources (e.g. BNC, COCA). On the other hand, while larger corpora may provide more comprehensive coverage, more specialised corpora may be more suitable to answer more specific research questions. Second, the design and accessibility of a corpus may pose challenges for both teachers and learners.

For example, some studies show that Lextutor, a text-based concordancer, may be too technical for learners. In contrast, AntConc has been found suitable only for adult learners with a high level of English proficiency. Other corpus query platforms, such as SketchEngine, are powerful and user-friendly but available only via subscription, which could be a financial barrier for some universities. Finally, learning how to effectively use corpora in the classroom and design corpus-based materials to fit specific teaching objectives and students’ levels of digital literacy can be challenging and time-consuming.

To help with these challenges, we have gathered several samples of CLARIN corpora and tools currently used in teaching and training by UPSKILLS consortium partners and other educators within the CLARIN community. Furthermore, we have provided links to available tutorials that can be repurposed for classroom use.